The edge is a new hub for services. For telcos, it is a new way to monetize and innovate: a gateway to new business avenues, from broadband and gaming to AR/VR and AI/ML services.

However, telcos face a common challenge at the edge: how to design it to scale well, ideally in the same way cloud providers scale their data centers (DCs).

One way to scale is to add more servers whenever growth is needed. But with the limited space and power at the edge, continuously stacking servers is neither scalable nor economical.

A better strategy is to scale with smarter servers, which deliver more throughput and compute per rack unit (RU).

So it is essential to consider how servers are built, i.e., which components go inside them.

Consider the CPU (or GPU), which plays the central processing role among these components. Leaving all processing to the CPU, however, limits how far the server can scale, especially for new AI/ML-based services that demand heavy processing and high throughput. Thanks to technological advances, some of this processing can now be offloaded to networking components, making the server more scalable.

We think PCI Express Gen 5 and SmartNICs are two server features that can help here, bringing more scalability with an optimal offload to the networking layer.

What is PCI Express Gen 5?

PCI Express (PCIe) is one of the most critical components for efficient, high-bandwidth data transfer inside a server.

Before we discuss what PCI Express is, and PCI Express Gen 5 in particular, let’s ask what PCI is.

PCI is short for Peripheral Component Interconnect. It is a bus for attaching components to a computer: peripherals such as GPUs, sound cards, and SSDs connect to it so they can exchange data with the CPU.

Now you may ask why a “bus” is so important.

In fact, a bus is like a gateway in a server; it can be an enabler of, or a bottleneck to, efficient communication within the server. Every component that needs to reach the processing unit has to use that bus, so the more efficient the bus architecture, the better the server performs.

PCI has a long history, and over the years it has evolved into PCIe and its successive generations.

PCI Express (PCIe) superseded PCI, the original standard, which provided a data rate of 133 MB/s shared across the whole bus. PCIe offers better performance, throughput, and error detection. PCIe cards plug into PCIe slots on the motherboard.

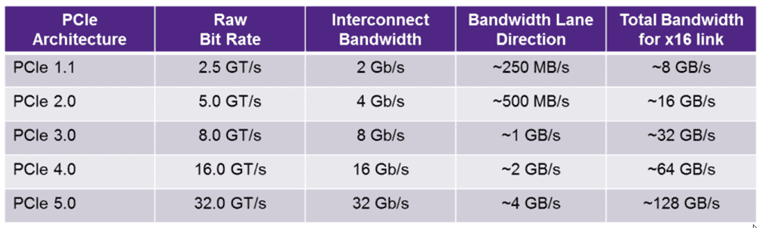

PCIe 1.1, introduced in 2005, supported only 0.25 GB/s per lane in each direction, whereas PCIe 5.0, introduced in 2019, supports about 4 GB/s per lane in each direction, for an aggregate bandwidth of 128 GB/s (both directions combined) on a 16-lane (x16) link.
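The per-lane figures above follow from each generation's transfer rate and line encoding. The sketch below reproduces them, assuming the published transfer rates (2.5, 5, 8, 16, and 32 GT/s for Gen 1 to Gen 5) and encodings (8b/10b for Gen 1 and 2, 128b/130b from Gen 3 on). It ignores packet and flow-control overhead, so real-world throughput is lower. The class and function names are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieGen:
    name: str
    gt_per_s: float    # raw transfer rate per lane, in gigatransfers per second
    payload_bits: int  # line encoding: payload bits per coded block...
    coded_bits: int    # ...and total bits sent on the wire for that block


GENERATIONS = [
    PcieGen("1.x", 2.5, 8, 10),     # 8b/10b encoding
    PcieGen("2.0", 5.0, 8, 10),
    PcieGen("3.0", 8.0, 128, 130),  # 128b/130b encoding from Gen 3 on
    PcieGen("4.0", 16.0, 128, 130),
    PcieGen("5.0", 32.0, 128, 130),
]

# Legacy 32-bit PCI at 33.33 MHz: about 133 MB/s, shared by every device on the bus.
LEGACY_PCI_MB_PER_S = 33.33 * 32 / 8


def lane_gb_per_s(gen: PcieGen) -> float:
    """Usable bandwidth of one lane in one direction, in GB/s, after line encoding."""
    return gen.gt_per_s * gen.payload_bits / gen.coded_bits / 8


def link_gb_per_s(gen: PcieGen, lanes: int, both_directions: bool = False) -> float:
    """Encoded bandwidth of a multi-lane link; PCIe links are full duplex."""
    per_direction = lane_gb_per_s(gen) * lanes
    return per_direction * 2 if both_directions else per_direction


def raw_link_gb_per_s(gen: PcieGen, lanes: int) -> float:
    """Raw signaling rate of a link, both directions, before encoding overhead."""
    return gen.gt_per_s * lanes * 2 / 8


if __name__ == "__main__":
    print(f"Legacy PCI: {LEGACY_PCI_MB_PER_S:.0f} MB/s (shared)")
    for gen in GENERATIONS:
        print(
            f"PCIe {gen.name}: {lane_gb_per_s(gen):.2f} GB/s per lane, "
            f"{link_gb_per_s(gen, 16):.1f} GB/s per direction on x16"
        )
```

For a Gen 5 x16 link this gives about 63 GB/s per direction, or about 126 GB/s in both directions after encoding. The commonly quoted 128 GB/s is the raw rate before 128b/130b encoding.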

PCIe evolution (source: Synopsys)

So what does this evolution in PCIe mean for servers?

Earlier PCIe generations could not keep up with the throughput demands of the AI/ML processing that GPUs perform today. GPUs need a high-bandwidth bus, and that is where multi-lane PCI Express helps!

A PCIe lane is a physical, point-to-point link between a device (a GPU, for example) and the host processor; each lane consists of two differential signal pairs, one for sending and one for receiving. The more lanes a link has, the more data it can carry in parallel, raising total throughput. PCIe cards are available in several lane widths, such as x4, x8, and x16.

PCI Express lanes are essential for today’s high-throughput communication. For example, running a graphics card on fewer lanes than it was designed for (say, an x16 card in a slot wired for only four lanes) degrades its performance.
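Why lane count matters can be pictured with a simplified model of how a multi-lane link spreads data: bytes are dealt round-robin across the lanes and reassembled at the receiver. This is a conceptual sketch only. It ignores framing, scrambling, and encoding, and the function names are hypothetical, not taken from any driver or specification.

```python
from __future__ import annotations

COMMON_WIDTHS = (1, 2, 4, 8, 16)  # common link widths (x1 to x16)


def stripe(payload: bytes, lanes: int) -> list[bytes]:
    """Deal bytes round-robin onto lanes: byte 0 to lane 0, byte 1 to lane 1, and so on."""
    if lanes not in COMMON_WIDTHS:
        raise ValueError(f"unsupported link width x{lanes}")
    return [payload[lane::lanes] for lane in range(lanes)]


def unstripe(streams: list[bytes]) -> bytes:
    """Receiver side: interleave the per-lane streams back into the original order."""
    lanes = len(streams)
    out = bytearray(sum(len(s) for s in streams))
    for lane, stream in enumerate(streams):
        out[lane::lanes] = stream
    return bytes(out)


def byte_times(payload_len: int, lanes: int) -> int:
    """Byte slots the busiest lane needs; transfer time scales with this number."""
    return -(-payload_len // lanes)  # ceiling division


if __name__ == "__main__":
    for width in (16, 4):
        print(f"x{width}: {byte_times(4096, width)} byte times for a 4 KiB transfer")
```

In this model a 4 KiB transfer needs 256 byte times on x16 but 1,024 on x4, which is why an x16 card limited to four lanes slows down.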

While PCIe handles communication inside the server, another component, the SmartNIC, handles the server’s communication with the outside world.

SmartNIC

Before we discuss SmartNICs, it helps to know what a NIC is.

What is NIC?

NIC stands for Network Interface Card. A NIC allows the server to communicate with the external world. A SmartNIC, however, can do much more than that: it can also take on many compute-related tasks, as explained below.

What is SmartNIC?

A SmartNIC is a NIC with onboard accelerators, designed to lower latency, accelerate encryption, and reduce packet loss. It can run multiple network functions, such as load balancing and network traffic telemetry.
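To make "load balancing and telemetry" concrete, here is a generic sketch of what such functions compute per packet: hashing a flow's 5-tuple so every packet of that flow reaches the same backend, and counting packets and bytes per backend. This is an illustration in plain Python, not any SmartNIC vendor's API; real NICs do this in hardware, often with a Toeplitz (RSS) hash rather than the CRC32 assumed here. All names are hypothetical.

```python
import zlib
from collections import Counter
from typing import NamedTuple, Sequence


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: int  # IP protocol number, e.g. 6 for TCP


def pick_backend(flow: FiveTuple, backends: Sequence[str]) -> str:
    """Hash the 5-tuple so every packet of a flow lands on the same backend."""
    key = "|".join(str(part) for part in flow).encode()
    return backends[zlib.crc32(key) % len(backends)]


class FlowTelemetry:
    """Per-backend packet and byte counters, the kind of statistics a NIC can export."""

    def __init__(self) -> None:
        self.packets = Counter()
        self.bytes = Counter()

    def record(self, backend: str, length: int) -> None:
        self.packets[backend] += 1
        self.bytes[backend] += length


if __name__ == "__main__":
    backends = ["srv-a", "srv-b", "srv-c", "srv-d"]
    telemetry = FlowTelemetry()
    flow = FiveTuple("192.0.2.10", "198.51.100.5", 40000, 443, 6)
    for _ in range(3):
        telemetry.record(pick_backend(flow, backends), 1500)
    print(dict(telemetry.packets), dict(telemetry.bytes))
```

Hashing on the full 5-tuple keeps a TCP connection pinned to one backend while still spreading different connections across all of them.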

Beyond networking (where it functions as a network adapter), a SmartNIC can offload some storage and security functions (firewalling, for example) from the processor, freeing the processor for other compute tasks. For example, SmartNICs can act as storage controllers for SSDs and HDDs in DC servers.
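As an example of a security function a SmartNIC could take off the host, the sketch below evaluates a simple first-match firewall rule list against a packet's source address and destination port. It uses only Python's standard `ipaddress` module; the rule format, field names, and example rules are hypothetical, and real SmartNIC firewalls match many more fields at line rate.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Rule:
    action: str                     # "allow" or "drop"
    src_net: str                    # source prefix in CIDR notation
    dst_port: Optional[int] = None  # None matches any destination port


def evaluate(rules: Sequence[Rule], src_ip: str, dst_port: int, default: str = "drop") -> str:
    """Return the action of the first matching rule, or the default action."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_net = addr in ipaddress.ip_network(rule.src_net)
        port_ok = rule.dst_port is None or rule.dst_port == dst_port
        if in_net and port_ok:
            return rule.action
    return default


# Hypothetical example policy.
RULES = [
    Rule("drop", "10.0.0.0/8", dst_port=23),   # block Telnet from the internal range
    Rule("allow", "10.0.0.0/8"),               # allow other internal traffic
    Rule("allow", "0.0.0.0/0", dst_port=443),  # allow HTTPS from anywhere
]

if __name__ == "__main__":
    print(evaluate(RULES, "10.1.2.3", 23))     # matched by the first rule
    print(evaluate(RULES, "203.0.113.7", 80))  # no rule matches, default applies
```

Rule order matters in first-match semantics: the Telnet drop must come before the broader internal allow, or it would never be reached.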

SmartNIC benefits

A SmartNIC combines networking, storage, and security functions in one component, reducing inventory and lowering CAPEX.

At the edge, where more integrated and smarter servers are desired, it makes real sense to equip servers with SmartNICs.

How are SmartNICs built? Types of SmartNIC

There are multiple ways to implement a SmartNIC:

- FPGA-based: FPGAs can be reprogrammed, so they can accelerate network functions in whatever way the programmer requires.

- System on Chip (SoC) based: programming the data plane in P4 or C/C++ lets the NIC be adapted to accelerate existing network functions and to add new ones.

- ASIC (application-specific integrated circuit) based: fixed-function ASICs implement SmartNIC functions and can offload some of the security and storage functions.
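The programmable data plane mentioned for SoC-based SmartNICs is typically described in P4 as a pipeline of match-action tables: header fields form a lookup key, and the matching entry selects an action. The Python model below is only a conceptual sketch of that idea. It is not P4 code and not any vendor's SDK, and every name in it is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

Packet = Dict[str, int]            # parsed header fields, e.g. {"vlan_id": 100}
Action = Callable[[Packet], None]  # an action updates the packet's metadata


def set_egress(port: int) -> Action:
    """Build a forwarding action that sends the packet out of `port`."""
    def action(pkt: Packet) -> None:
        pkt["egress_port"] = port
    return action


def drop(pkt: Packet) -> None:
    pkt["drop"] = 1


@dataclass
class ExactMatchTable:
    key_fields: Tuple[str, ...]
    default_action: Action
    entries: Dict[Tuple[int, ...], Action] = field(default_factory=dict)

    def add_entry(self, key: Tuple[int, ...], action: Action) -> None:
        """Control plane: install a rule at runtime without changing the pipeline."""
        self.entries[key] = action

    def apply(self, pkt: Packet) -> None:
        """Data plane: build the key from header fields and run the matched action."""
        key = tuple(pkt[f] for f in self.key_fields)
        self.entries.get(key, self.default_action)(pkt)


if __name__ == "__main__":
    vlan_table = ExactMatchTable(key_fields=("vlan_id",), default_action=drop)
    vlan_table.add_entry((100,), set_egress(3))
    pkt = {"vlan_id": 100}
    vlan_table.apply(pkt)
    print(pkt)
```

The split between `add_entry` (control plane) and `apply` (data plane) mirrors why programmable NICs are flexible: new behavior comes from installing entries or new tables, not from new silicon.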

Drawbacks of smartNIC

SmartNICs do come with some limitations:

- More expensive than regular NICs

- No common standards for SmartNICs

- Their flexibility and complexity mean that developing a new feature can sometimes take longer.

PCI Express Gen 5 with SmartNICs can open the door to innovation and efficiency.

In edge DCs, where space and power are already limited, a smart server architecture brings greater efficiency to both intra-server and inter-server/switch communication. A server with a highly efficient PCIe bus lets the CPU/GPU deliver optimal performance, and offloading security and storage functions to the SmartNIC frees the CPU/GPU to spend its resources on more intensive workloads such as AI/ML.

Lanner’s edge servers with PCIe and SmartNICs

Lanner’s Falcon H8 is a PCIe form-factor edge AI accelerator designed to offload deep learning inference from the CPU. Its standard PCIe interface lets legacy devices run video-intensive Edge AI applications such as video analytics, traffic management, and access control with high performance.

To meet cloud and NFV demand, Virtual Extensible LAN (VXLAN) is used to create virtual networks by encapsulating Layer 2 Ethernet frames in UDP packets carried over IP infrastructure. The challenge of scaling SDN/NFV deployments can be addressed with a SmartNIC that offloads VXLAN encapsulation and decapsulation from the CPU, increasing CPU efficiency. Lanner’s FX-3230 server appliance, paired with an Ethernity Networks ACE-NIC SmartNIC connected over PCI Express, is a good solution to this problem.
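The per-packet work a SmartNIC takes over here is small but constant. The sketch below builds and parses the 8-byte VXLAN header defined in RFC 7348 (an 8-bit flags field with the I bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits). It omits the outer Ethernet, IP, and UDP headers (the UDP destination port is 4789) and is not the ACE-NIC's interface; the function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned UDP destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack flags (8 bits) + reserved (24) + VNI (24) + reserved (8), network byte order."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header (outer headers not shown)."""
    return vxlan_header(vni) + inner_frame


def decapsulate(udp_payload: bytes) -> tuple[int, bytes]:
    """Return (VNI, inner frame) from a VXLAN UDP payload."""
    if len(udp_payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8, udp_payload[8:]


if __name__ == "__main__":
    print(vxlan_header(5000).hex())
```

Doing this for every packet on the host costs CPU cycles that scale with traffic, which is why offloading it to the NIC frees capacity as SDN/NFV deployments grow.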

About Lanner and Whitebox Solutions

Lanner is a leading manufacturer of white box and uCPE solutions. It provides cutting-edge smart white boxes and uCPEs for telecom applications like SDN, NFV, RAN, SD-WAN, and orchestration.

Lanner operates in the US through its subsidiary Whitebox Solutions.
